Towards reducing visual workload in surgical navigation: proof-of-concept of an augmented reality haptic guidance system

Venue. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization (2022)

Materials.

DOI

PDF

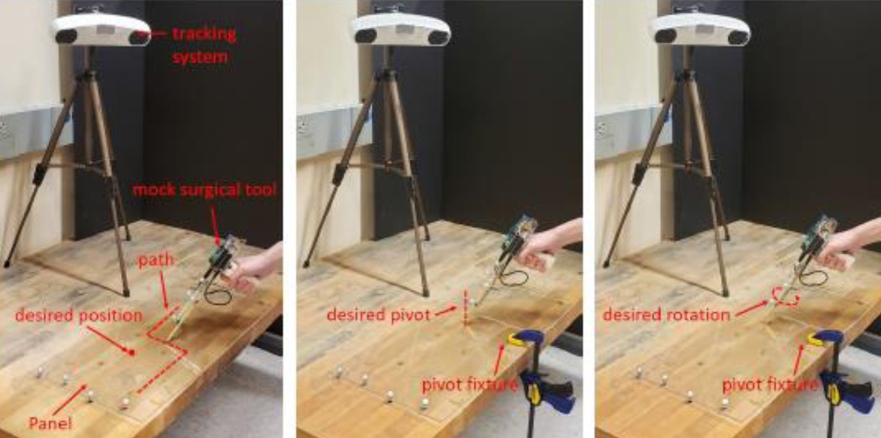

Abstract. The integration of planning and navigation capabilities into the operating room has enabled surgeons to take on more precise procedures. Traditionally, planning and navigation information is presented on monitors in the surgical theatre, but these monitors force the surgeon to frequently look away from the surgical area. Augmented reality technologies have enabled surgeons to visualise navigation information in situ. However, burdening the visual field with additional information can be distracting. We propose integrating haptic feedback into a surgical tool handle to provide surgical guidance. This approach reduces the amount of visual information, freeing surgeons to maintain visual attention on the patient and the surgical site. To investigate the feasibility of this guidance paradigm, we conducted a pilot study with six subjects. Participants traced paths, pinpointed locations and matched alignments with a mock surgical tool featuring a novel haptic handle. We collected quantitative data, tracking users' accuracy and time to completion, as well as subjective cognitive load. Our results show that haptic feedback can guide participants using a tool to sub-millimetre and sub-degree accuracy with only a little training. Participants were able to match a location with an average error of 0.82 mm, desired pivot alignments with an average error of 0.83° and desired rotations to 0.46°.
